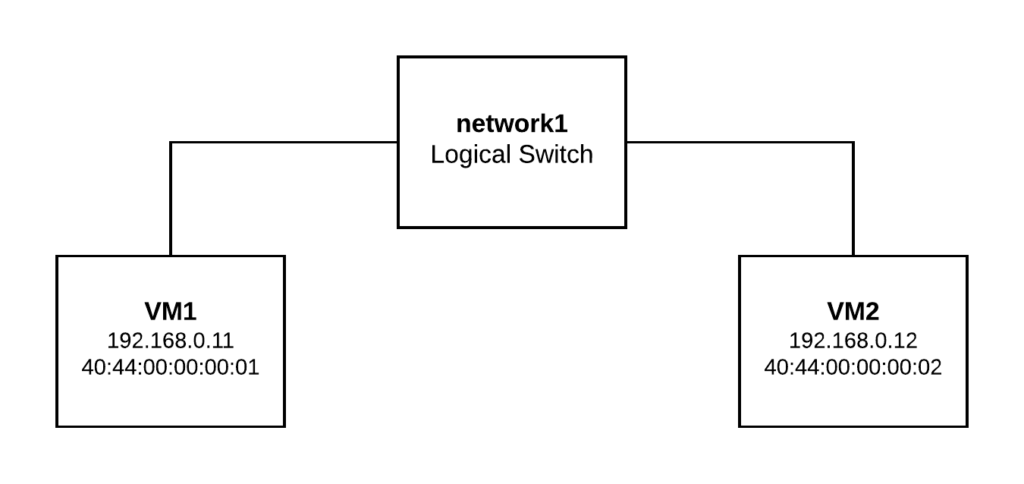

In the last post we covered how OVN distributes the pipeline execution across the different hypervisors thanks to the encapsulation used. Using the same system as a base, let’s create a Logical Router and a second Logical Switch so we can see how routing works between different nodes.

```bash
ovn-nbctl ls-add network1
ovn-nbctl ls-add network2
ovn-nbctl lsp-add network1 vm1
ovn-nbctl lsp-add network2 vm2
ovn-nbctl lsp-set-addresses vm1 "40:44:00:00:00:01 192.168.0.11"
ovn-nbctl lsp-set-addresses vm2 "40:44:00:00:00:02 192.168.1.11"

ovn-nbctl lr-add router1

ovn-nbctl lrp-add router1 router1-net1 40:44:00:00:00:03 192.168.0.1/24
ovn-nbctl lsp-add network1 net1-router1
ovn-nbctl lsp-set-addresses net1-router1 40:44:00:00:00:03
ovn-nbctl lsp-set-type net1-router1 router
ovn-nbctl lsp-set-options net1-router1 router-port=router1-net1

ovn-nbctl lrp-add router1 router1-net2 40:44:00:00:00:04 192.168.1.1/24
ovn-nbctl lsp-add network2 net2-router1
ovn-nbctl lsp-set-addresses net2-router1 40:44:00:00:00:04
ovn-nbctl lsp-set-type net2-router1 router
ovn-nbctl lsp-set-options net2-router1 router-port=router1-net2
```
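Attaching each switch to the router takes the same five commands, so the step can be wrapped in a small function. This is a minimal sketch, not part of OVN: the function name attach_switch_to_router, the run wrapper and the DRY_RUN variable are hypothetical; the function only issues the ovn-nbctl subcommands already used above.

```bash
# Hypothetical helper: attach a logical switch to a logical router by creating
# the router port and its peer switch port of type "router".
# Set DRY_RUN=1 to print the commands instead of executing them.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: attach_switch_to_router ROUTER LRP_NAME SWITCH LSP_NAME MAC CIDR
attach_switch_to_router() {
    local router="$1" lrp="$2" switch="$3" lsp="$4" mac="$5" cidr="$6"

    # Router side: the logical router port owns the gateway MAC and IP.
    run ovn-nbctl lrp-add "$router" "$lrp" "$mac" "$cidr"
    # Switch side: a "router" type port pointing at the router port.
    run ovn-nbctl lsp-add "$switch" "$lsp"
    run ovn-nbctl lsp-set-addresses "$lsp" "$mac"
    run ovn-nbctl lsp-set-type "$lsp" router
    run ovn-nbctl lsp-set-options "$lsp" router-port="$lrp"
}
```

For example, attach_switch_to_router router1 router1-net2 network2 net2-router1 40:44:00:00:00:04 192.168.1.1/24 would reproduce the network2 side of the topology above; running it with DRY_RUN=1 first prints the commands for review.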

And then on Worker1 and Worker2 respectively, let’s bind VM1 and VM2 ports inside network namespaces:

```bash
# Worker1
ovs-vsctl add-port br-int vm1 -- set Interface vm1 type=internal -- set Interface vm1 external_ids:iface-id=vm1
ip netns add vm1
ip link set vm1 netns vm1
ip netns exec vm1 ip link set vm1 address 40:44:00:00:00:01
ip netns exec vm1 ip addr add 192.168.0.11/24 dev vm1
ip netns exec vm1 ip link set vm1 up
ip netns exec vm1 ip route add default via 192.168.0.1

# Worker2
ovs-vsctl add-port br-int vm2 -- set Interface vm2 type=internal -- set Interface vm2 external_ids:iface-id=vm2
ip netns add vm2
ip link set vm2 netns vm2
ip netns exec vm2 ip link set vm2 address 40:44:00:00:00:02
ip netns exec vm2 ip addr add 192.168.1.11/24 dev vm2
ip netns exec vm2 ip link set vm2 up
ip netns exec vm2 ip route add default via 192.168.1.1
```
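Both workers run the same seven steps with different names and addresses. A minimal sketch of a helper that captures them, assuming (as above) that the VM name is reused for the OVS port, the namespace and the OVN logical port; bind_fake_vm, run and DRY_RUN are hypothetical names.

```bash
# Hypothetical helper: create an OVS internal port on br-int, tag it with the
# OVN logical port name (external_ids:iface-id) and move it into a network
# namespace that plays the role of the VM.
# Set DRY_RUN=1 to print the commands instead of executing them.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: bind_fake_vm NAME MAC IP/PREFIX GATEWAY
bind_fake_vm() {
    local name="$1" mac="$2" cidr="$3" gw="$4"

    # iface-id is what ovn-controller uses to claim the logical port.
    run ovs-vsctl add-port br-int "$name" \
        -- set Interface "$name" type=internal \
        -- set Interface "$name" external_ids:iface-id="$name"
    run ip netns add "$name"
    run ip link set "$name" netns "$name"
    # The MAC must match the one given to lsp-set-addresses.
    run ip netns exec "$name" ip link set "$name" address "$mac"
    run ip netns exec "$name" ip addr add "$cidr" dev "$name"
    run ip netns exec "$name" ip link set "$name" up
    # Default route via the logical router port on this subnet.
    run ip netns exec "$name" ip route add default via "$gw"
}
```

On Worker2, bind_fake_vm vm2 40:44:00:00:00:02 192.168.1.11/24 192.168.1.1 would be equivalent to the commands above.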

First, let’s check connectivity between the two VMs through the Logical Router:

```
[root@worker1 ~]# ip netns exec vm1 ping 192.168.1.11 -c2
PING 192.168.1.11 (192.168.1.11) 56(84) bytes of data.
64 bytes from 192.168.1.11: icmp_seq=1 ttl=63 time=0.371 ms
64 bytes from 192.168.1.11: icmp_seq=2 ttl=63 time=0.398 ms

--- 192.168.1.11 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1000ms
rtt min/avg/max/mdev = 0.371/0.384/0.398/0.023 ms
```

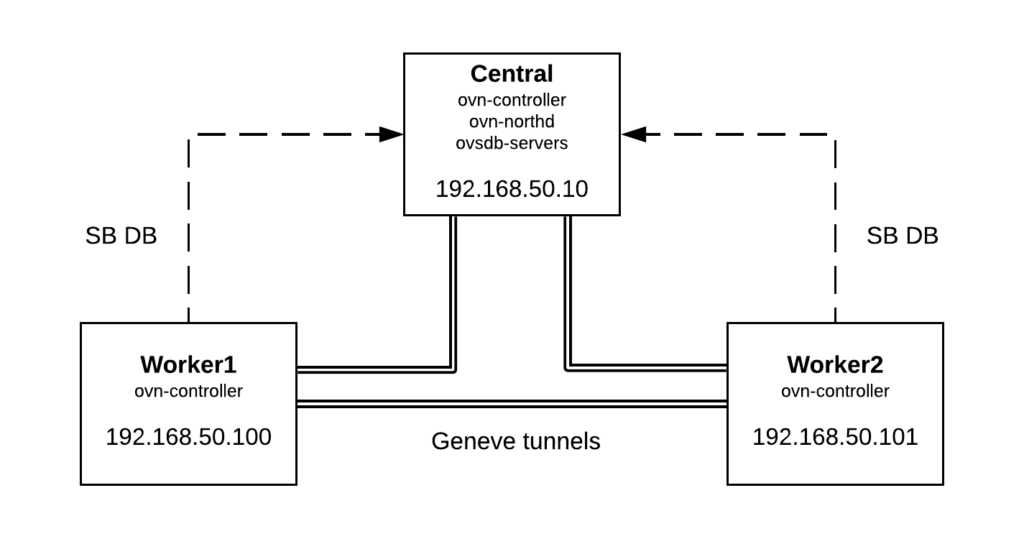

ovn-trace

Let’s now use ovn-trace to see what the path of a packet from VM1 to VM2 looks like, and then we’ll verify how it is realized in our physical deployment with 3 hypervisors:

```
[root@central ~]# ovn-trace --summary network1 'inport == "vm1" && eth.src == 40:44:00:00:00:01 && eth.dst == 40:44:00:00:00:03 && ip4.src == 192.168.0.11 && ip4.dst == 192.168.1.11 && ip.ttl == 64'
# ip,reg14=0x1,vlan_tci=0x0000,dl_src=40:44:00:00:00:01,dl_dst=40:44:00:00:00:03,nw_src=192.168.0.11,nw_dst=192.168.1.11,nw_proto=0,nw_tos=0,nw_ecn=0,nw_ttl=64
ingress(dp="network1", inport="vm1") {
    next;
    outport = "net1-router1";
    output;
    egress(dp="network1", inport="vm1", outport="net1-router1") {
        output;
        /* output to "net1-router1", type "patch" */;
        ingress(dp="router1", inport="router1-net1") {
            next;
            ip.ttl--;
            reg0 = ip4.dst;
            reg1 = 192.168.1.1;
            eth.src = 40:44:00:00:00:04;
            outport = "router1-net2";
            flags.loopback = 1;
            next;
            eth.dst = 40:44:00:00:00:02;
            next;
            output;
            egress(dp="router1", inport="router1-net1", outport="router1-net2") {
                output;
                /* output to "router1-net2", type "patch" */;
                ingress(dp="network2", inport="net2-router1") {
                    next;
                    outport = "vm2";
                    output;
                    egress(dp="network2", inport="net2-router1", outport="vm2") {
                        output;
                        /* output to "vm2", type "" */;
                    };
                };
            };
        };
    };
};
```

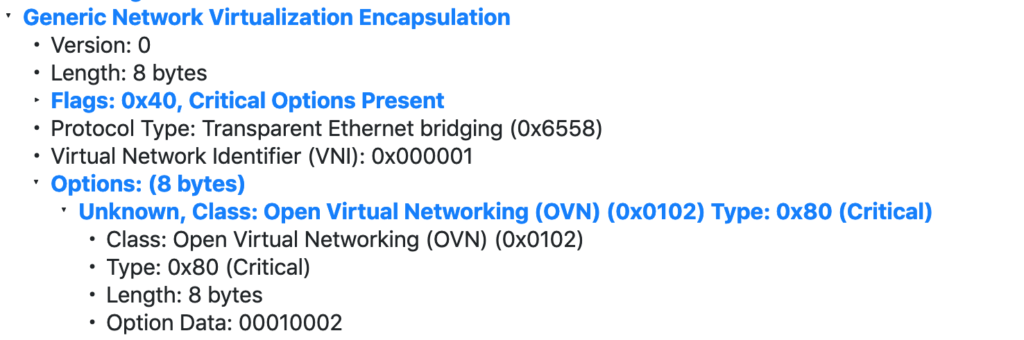

As you can see, the packet goes through 3 OVN datapaths: network1, router1 and network2. As VM1 is on Worker1 and VM2 is on Worker2, the packet will traverse the tunnel between both hypervisors and thanks to the Geneve encapsulation, the execution pipeline will be distributed:

- Worker1 will execute both ingress and egress pipelines of network1.

- Worker1 will also perform the routing (the router1 ingress and egress stages in the trace above) and the ingress pipeline for network2. Then the packet will be pushed to Worker2 via the tunnel.

- Worker2 will execute the egress pipeline for network2 delivering the packet to VM2.
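When traces get longer, it can help to extract just the sequence of datapaths the packet crosses. A minimal sketch, assuming the --summary output format shown above, where every datapath appears as an ingress(dp="NAME", ...) stage; the function name trace_datapaths is hypothetical.

```bash
# Sketch: print the logical datapaths a packet traverses, in order, from
# `ovn-trace --summary` output read on stdin.
trace_datapaths() {
    awk '
        match($0, /ingress\(dp="[^"]*"/) {
            # Skip the 12 characters of ingress(dp=" and the closing quote.
            dp = substr($0, RSTART + 12, RLENGTH - 13)
            path = (path == "" ? dp : path " -> " dp)
        }
        END { print path }
    '
}
```

Piping the ovn-trace command above into trace_datapaths should print network1 -> router1 -> network2, the three datapaths listed in the text.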

Let’s launch a ping from VM1 and check the Geneve traffic on Worker2:

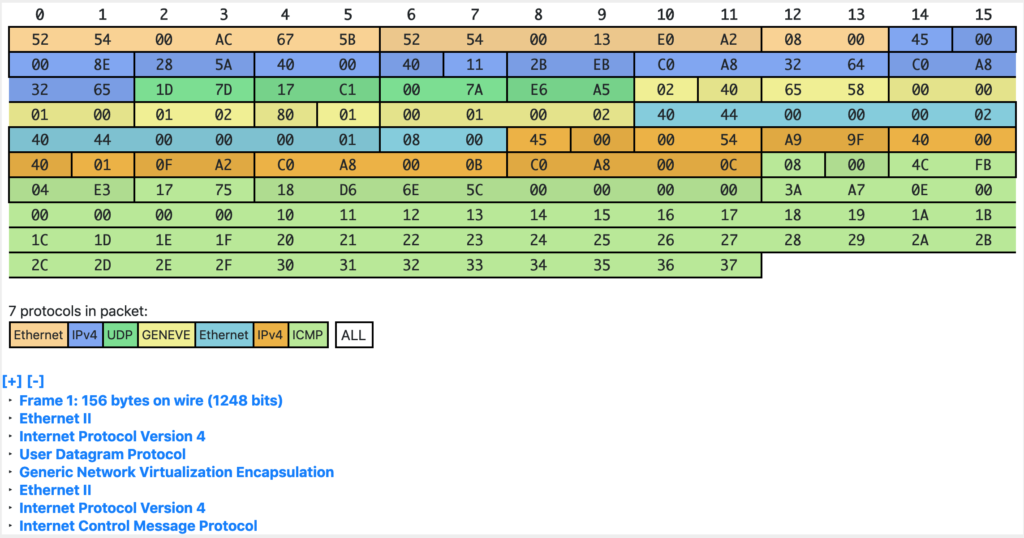

```
52:54:00:13:e0:a2 > 52:54:00:ac:67:5b, ethertype IPv4 (0x0800), length 156: (tos 0x0, ttl 64, id 46587, offset 0, flags [DF], proto UDP (17), length 142)
    192.168.50.100.55145 > 192.168.50.101.6081: [bad udp cksum 0xe6a5 -> 0xb087!] Geneve, Flags [C],
    vni 0x8,
    proto TEB (0x6558),
    options [class Open Virtual Networking (OVN) (0x102)
    type 0x80(C) len 8
    data 00020001]
```

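From what we learned in the previous post, when the packet arrives at Worker2, the egress pipeline of datapath 8 (the VNI) will be executed with ingress port 2 and egress port 1: the OVN option data 00020001 carries the logical ingress port in bits 16-30 and the logical egress port in bits 0-15. Let’s see if this matches what ovn-trace gave us earlier: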

```
egress(dp="network2", inport="net2-router1", outport="vm2")
```

```
[root@central ~]# ovn-sbctl get Datapath_Binding network2 tunnel_key
8
[root@central ~]# ovn-sbctl get Port_Binding net2-router1 tunnel_key
2
[root@central ~]# ovn-sbctl get Port_Binding vm2 tunnel_key
1
```
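Decoding the tcpdump fields into these keys can be sketched with shell arithmetic. This assumes the OVN Geneve option layout described in ovn-architecture(7) (1 reserved bit, a 15-bit logical ingress port, a 16-bit logical egress port), which matches the bit ranges used by the table 0 flow in the OpenFlow analysis; decode_ovn_geneve is a hypothetical name.

```bash
# Sketch: decode the OVN fields tcpdump prints for a Geneve packet.
# The VNI is the logical datapath tunnel key; the 32-bit OVN option
# (class 0x102, type 0x80) holds the logical ingress port in bits 16-30
# and the logical egress port in bits 0-15 (bit 31 is reserved).
# Usage: decode_ovn_geneve VNI OPTION_DATA   (both as printed by tcpdump)
decode_ovn_geneve() {
    local vni=$(( $1 ))           # "0x8" -> 8
    local data=$(( 0x$2 ))        # "00020001" -> 131073
    local inport=$(( (data >> 16) & 0x7fff ))
    local outport=$(( data & 0xffff ))
    echo "datapath=$vni inport=$inport outport=$outport"
}
```

For the capture above, decode_ovn_geneve 0x8 00020001 gives datapath=8 inport=2 outport=1, matching the ovn-sbctl output.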

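What about the reply from VM2 to VM1? If we check the tunnel traffic on Worker2, we’ll see the ICMP echo reply packets carrying a different datapath (VNI 7). The option data is again 00020001, but since port tunnel keys are only unique within their datapath, ingress port 2 and egress port 1 now refer to net1-router1 and vm1: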

```
22:19:03.896340 52:54:00:ac:67:5b > 52:54:00:13:e0:a2, ethertype IPv4 (0x0800), length 156: (tos 0x0, ttl 64, id 30538, offset 0, flags [DF], proto UDP (17), length 142)
    192.168.50.101.13530 > 192.168.50.100.6081: [bad udp cksum 0xe6a5 -> 0x5419!] Geneve, Flags [C],
    vni 0x7,
    proto TEB (0x6558),
    options [class Open Virtual Networking (OVN) (0x102)
    type 0x80(C) len 8
    data 00020001]
```

```
[root@central ~]# ovn-sbctl get Datapath_Binding network1 tunnel_key
7
[root@central ~]# ovn-sbctl get Port_Binding net1-router1 tunnel_key
2
[root@central ~]# ovn-sbctl get Port_Binding vm1 tunnel_key
1
```
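Going the other way, from the numbers on the wire back to logical port names, can be sketched with the generic database commands of ovn-sbctl (find, --bare, --columns). Because a Port_Binding tunnel_key is only unique within its datapath, the lookup filters on both the datapath row and the port key. This is a sketch under assumptions: lookup_logical_port and the SBCTL override are hypothetical, and it expects exactly one Datapath_Binding per tunnel key.

```bash
# Sketch: map a (datapath tunnel key, port tunnel key) pair seen in a Geneve
# capture back to the logical port name via the Southbound DB.
# SBCTL (hypothetical) lets the ovn-sbctl command be swapped, e.g. for tests.
# Usage: lookup_logical_port DATAPATH_KEY PORT_KEY
lookup_logical_port() {
    local sbctl="${SBCTL:-ovn-sbctl}" dp_key="$1" port_key="$2" dp_uuid

    # The Datapath_Binding row whose tunnel_key is the Geneve VNI.
    dp_uuid=$($sbctl --bare --columns=_uuid find Datapath_Binding tunnel_key="$dp_key")
    if [ -z "$dp_uuid" ]; then
        echo "no datapath with tunnel_key=$dp_key" >&2
        return 1
    fi

    # Port tunnel keys are scoped to a datapath, so match on both.
    $sbctl --bare --columns=logical_port find Port_Binding \
        datapath="$dp_uuid" tunnel_key="$port_key"
}
```

With the keys from the two captures, lookup_logical_port 7 1 should return vm1 and lookup_logical_port 8 1 should return vm2.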

As we can see, the routing happens locally on the source node: there’s no need to send the packet to any central/network node, as East/West routing is distributed in OVN. North/South traffic, where SNAT is required, will go through the gateway node, but we’ll cover that in upcoming posts.

OpenFlow analysis

So far we’ve only focused on the logical elements, but ovn-controller ultimately translates the Logical Flows into physical OpenFlow flows on the OVN integration bridge (br-int). Let’s inspect the ports that our bridge has on Worker2:

```
[root@worker2 ~]# ovs-ofctl show br-int
OFPT_FEATURES_REPLY (xid=0x2): dpid:0000404400000002
n_tables:254, n_buffers:0
capabilities: FLOW_STATS TABLE_STATS PORT_STATS QUEUE_STATS ARP_MATCH_IP
actions: output enqueue set_vlan_vid set_vlan_pcp strip_vlan mod_dl_src mod_dl_dst mod_nw_src mod_nw_dst mod_nw_tos mod_tp_src mod_tp_dst
 1(ovn-centra-0): addr:ea:64:c2:a9:86:fe
     config: 0
     state: 0
     speed: 0 Mbps now, 0 Mbps max
 2(ovn-worker-0): addr:de:96:64:2b:21:4a
     config: 0
     state: 0
     speed: 0 Mbps now, 0 Mbps max
 8(vm2): addr:40:44:00:00:00:02
     config: 0
     state: 0
     speed: 0 Mbps now, 0 Mbps max
 LOCAL(br-int): addr:40:44:00:00:00:02
     config: PORT_DOWN
     state: LINK_DOWN
     speed: 0 Mbps now, 0 Mbps max
OFPT_GET_CONFIG_REPLY (xid=0x4): frags=normal miss_send_len=0
```

Besides the LOCAL port of the bridge itself, we have 3 ports in the OVN bridge:

- ovn-centra-0: Tunnel port with Central node

- ovn-worker-0: Tunnel port with Worker1

- vm2: Our fake virtual machine that we bound to Worker2
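To map OpenFlow port numbers to names without reading through the whole ovs-ofctl show output, a minimal sketch that parses it, assuming the port line format shown above (the function name ofport_map is hypothetical):

```bash
# Sketch: turn `ovs-ofctl show br-int` output (on stdin) into "ofport name"
# lines. Port lines look like " 8(vm2): addr:..."; the LOCAL bridge port has
# no number and is skipped.
ofport_map() {
    sed -n 's/^ *\([0-9][0-9]*\)(\([^)]*\)): addr:.*/\1 \2/p'
}
```

On Worker2, ovs-ofctl show br-int | ofport_map would list 1 ovn-centra-0, 2 ovn-worker-0 and 8 vm2. For a single interface, ovs-vsctl get Interface vm2 ofport returns the number directly.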

When ICMP echo request packets come from VM1, we expect them to arrive via OpenFlow port 2 (the tunnel from Worker1) and to be delivered to VM2 on port 8. We can check this by inspecting the flows in table 0 (input) and table 65 (output) of br-int. For more detailed information on the OpenFlow tables, see the ovn-architecture(7) document, section “Architectural Physical Life Cycle of a Packet”.

```
[root@worker2 ~]# ovs-ofctl dump-flows br-int table=0 | grep idle_age='[0-1],'
 cookie=0x0, duration=3845.572s, table=0, n_packets=2979, n_bytes=291942, idle_age=0, priority=100,in_port=2 actions=move:NXM_NX_TUN_ID[0..23]->OXM_OF_METADATA[0..23],move:NXM_NX_TUN_METADATA0[16..30]->NXM_NX_REG14[0..14],move:NXM_NX_TUN_METADATA0[0..15]->NXM_NX_REG15[0..15],resubmit(,33)

[root@worker2 ~]# ovs-ofctl dump-flows br-int table=65 | grep idle_age='[0-1],'
 cookie=0x0, duration=3887.336s, table=65, n_packets=3062, n_bytes=297780, idle_age=0, priority=100,reg15=0x1,metadata=0x8 actions=output:8
```

I filtered the dump-flows output to display only flows with an idle_age of 0 or 1 seconds, i.e. flows that have been hit very recently. When inspecting tables with lots of flows, this filters out unwanted flows so we can focus on the ones we’re really interested in. Please note that for this particular example, I set the VM2 link down so that only the incoming ICMP packets from VM1 show up and the replies are hidden.
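The grep above only matches ages of exactly 0 or 1. A slightly more flexible sketch parses the idle_age value and keeps flows at or below a given number of seconds; recent_flows is a hypothetical name, and lines without an idle_age field are dropped.

```bash
# Sketch: read `ovs-ofctl dump-flows` output on stdin and keep only flows
# whose idle_age (seconds since the flow was last hit) is <= MAX_AGE.
# Usage: recent_flows [MAX_AGE]   (default 1)
recent_flows() {
    local max_age="${1:-1}"
    awk -v max="$max_age" '
        match($0, /idle_age=[0-9]+/) {
            # Skip the 9 characters of "idle_age=" to get the number.
            age = substr($0, RSTART + 9, RLENGTH - 9) + 0
            if (age <= max) print
        }
    '
}
```

For example, ovs-ofctl dump-flows br-int table=0 | recent_flows 5 would widen the window to the last five seconds.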

Let’s inspect the input flow on table 0 where the following actions happen:

```
actions=
    move:NXM_NX_TUN_ID[0..23]->OXM_OF_METADATA[0..23],
    move:NXM_NX_TUN_METADATA0[16..30]->NXM_NX_REG14[0..14],
    move:NXM_NX_TUN_METADATA0[0..15]->NXM_NX_REG15[0..15],
    resubmit(,33)
```

These actions will:

- Copy the 24 bits of the VNI (Tunnel ID / Logical Datapath) to the OpenFlow metadata.

- Copy bits 16-30 of the tunnel metadata (the OVN Geneve option; logical ingress port) to Open vSwitch register 14.

- Copy bits 0-15 of the tunnel metadata (logical egress port) to Open vSwitch register 15.
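The three moves can be emulated with shell arithmetic to check the register values by hand. A minimal sketch: nxm_bits and table0_moves are hypothetical names, bit 0 is the least significant bit, and since every destination range above starts at bit 0, the extracted value is exactly what lands in the destination field.

```bash
# Sketch: extract VALUE[START..END] (inclusive), as a move:SRC[START..END]
# action reads it before copying it into the destination bits.
nxm_bits() {
    local value="$1" start="$2" end="$3"
    local width=$(( end - start + 1 ))
    echo $(( (value >> start) & ((1 << width) - 1) ))
}

# Emulate the table 0 flow: TUN_ID -> metadata, option bits -> reg14/reg15.
# Usage: table0_moves TUN_ID TUN_METADATA0
table0_moves() {
    local tun_id=$(( $1 )) opt=$(( $2 ))
    echo "metadata=$(nxm_bits "$tun_id" 0 23)" \
         "reg14=$(nxm_bits "$opt" 16 30)" \
         "reg15=$(nxm_bits "$opt" 0 15)"
}
```

With the values from the echo request capture, table0_moves 0x8 0x00020001 prints metadata=8 reg14=2 reg15=1.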

From the ovn-architecture document, we can read:

> logical datapath field
>
> A field that denotes the logical datapath through which a packet is being processed. OVN uses the field that OpenFlow 1.1+ simply (and confusingly) calls “metadata” to store the logical datapath. (This field is passed across tunnels as part of the tunnel key.)
>
> logical input port field
>
> A field that denotes the logical port from which the packet entered the logical datapath. OVN stores this in Open vSwitch extension register number 14.
>
> Geneve and STT tunnels pass this field as part of the tunnel key. Although VXLAN tunnels do not explicitly carry a logical input port, OVN only uses VXLAN to communicate with gateways that from OVN’s perspective consist of only a single logical port, so that OVN can set the logical input port field to this one on ingress to the OVN logical pipeline.
>
> logical output port field
>
> A field that denotes the logical port from which the packet will leave the logical datapath. This is initialized to 0 at the beginning of the logical ingress pipeline. OVN stores this in Open vSwitch extension register number 15.
>
> Geneve and STT tunnels pass this field as part of the tunnel key. VXLAN tunnels do not transmit the logical output port field. Since VXLAN tunnels do not carry a logical output port field in the tunnel key, when a packet is received from VXLAN tunnel by an OVN hypervisor, the packet is resubmitted to table 8 to determine the output port(s); when the packet reaches table 32, these packets are resubmitted to table 33 for local delivery by checking a MLF_RCV_FROM_VXLAN flag, which is set when the packet arrives from a VXLAN tunnel.

Similarly, the output flow in table 65 matches on “reg15=0x1,metadata=0x8” and outputs the packet to OpenFlow port 8 (VM2). As we saw earlier, metadata 8 corresponds to the network2 Logical Switch and output port (reg15) 1 corresponds to the VM2 logical port (tunnel_key 1). Note that OpenFlow port number 8 and datapath tunnel key 8 just happen to coincide; they are unrelated values.
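The same correlation can be done for a whole table at once. A minimal sketch, assuming table 65 flows of the simple form shown above (a match on reg15 and metadata with a single output action); table65_summary is a hypothetical name and flows of any other shape are skipped.

```bash
# Sketch: summarize `ovs-ofctl dump-flows br-int table=65` output (on stdin)
# as "datapath=D outport=P -> ofport N", converting the hex keys to decimal.
table65_summary() {
    sed -n 's/.*reg15=\(0x[0-9a-fA-F]*\),metadata=\(0x[0-9a-fA-F]*\) actions=output:\([0-9][0-9]*\).*/\1 \2 \3/p' |
    while read -r reg15 metadata ofport; do
        echo "datapath=$(( $metadata )) outport=$(( $reg15 )) -> ofport $ofport"
    done
}
```

On Worker2, the flow above would be reported as datapath=8 outport=1 -> ofport 8, which can then be cross-checked against ovn-sbctl and ovs-ofctl show.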

Due to its distributed nature and everything being OpenFlow-based, tracing packets in a Software Defined Networking deployment built on OVN can be a bit trickier than with traditional solutions (iptables, network namespaces, …). Knowing the above concepts and getting familiar with the tools is key to debugging OVN systems effectively.